"Perfection is achieved not when there is nothing left to add, but when there is nothing left to take away" Antoine de Saint-Exupéry

"Adding the meaningful and subtracting the obvious" John Maeda

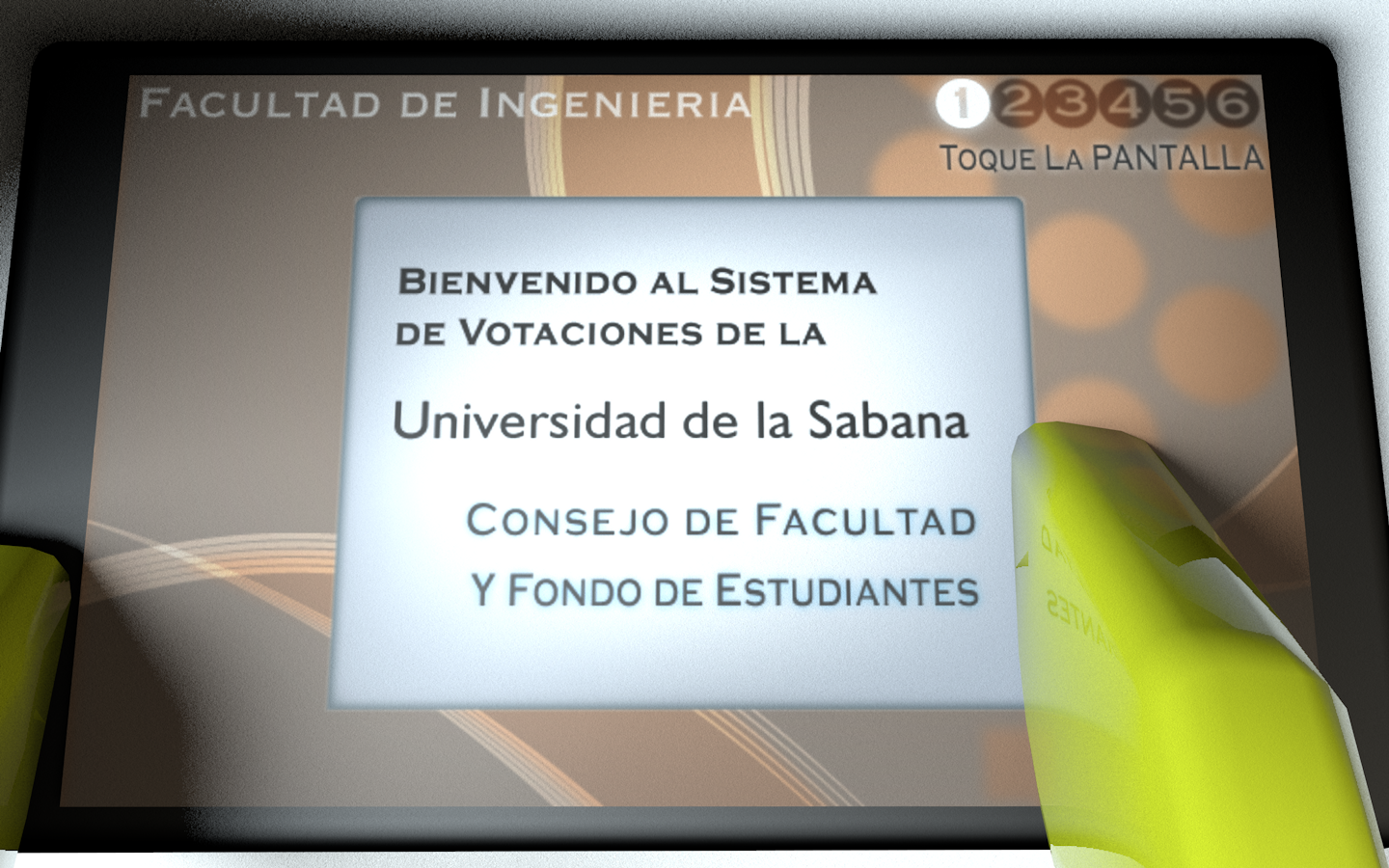

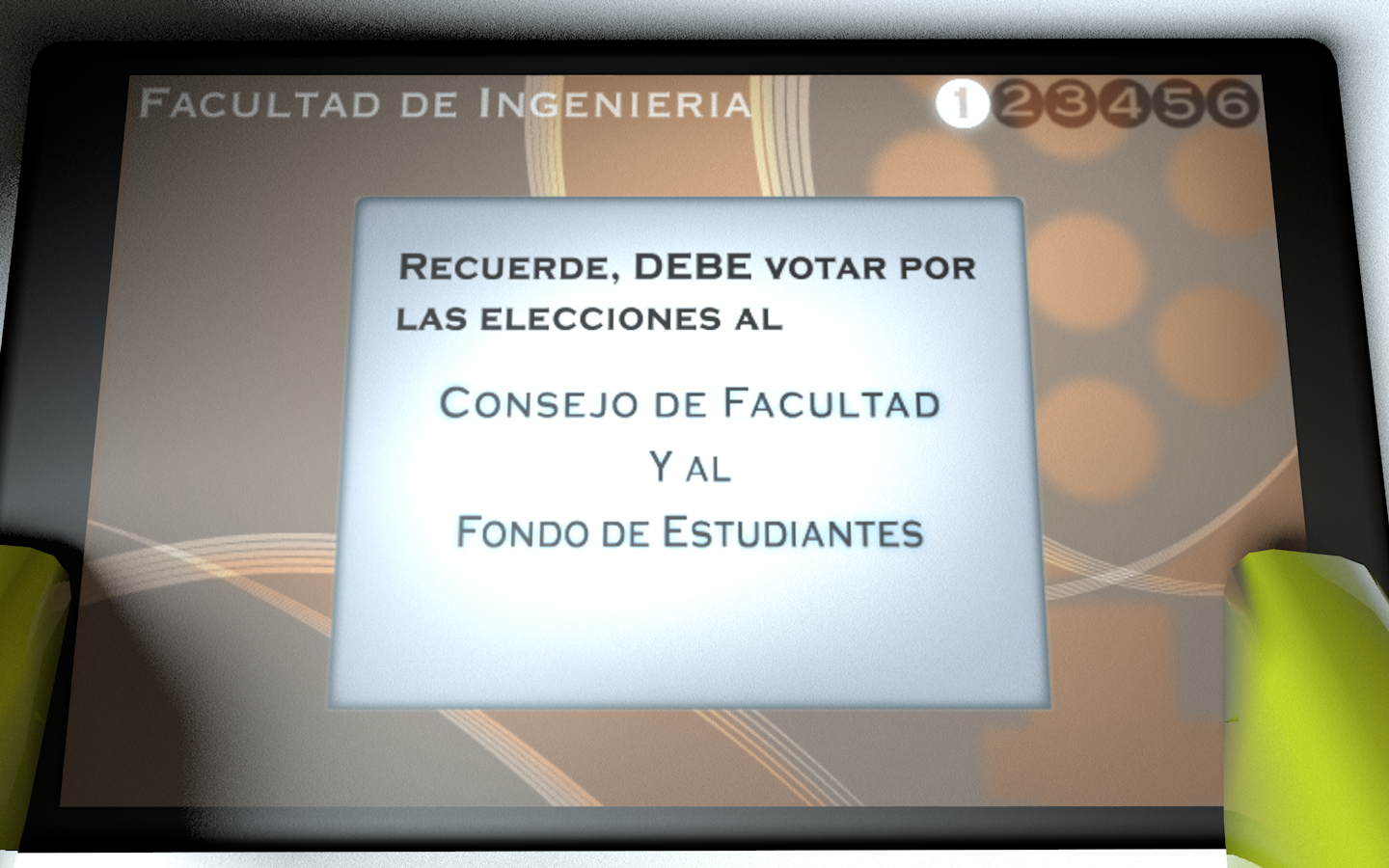

Not long ago I stumbled across an article called "7 Design Principles, Inspired By Zen Wisdom". In it, the authors describe the state mastered by composing with these principles as Shibumi. Even though the word has no direct translation, they explain that its meaning "is reserved for objects and experiences that exhibit in paradox and all at once the very best of everything and nothing: Elegant simplicity. Effortless effectiveness. Understated excellence. Beautiful imperfection." This was the beginning of my pursuit of a Shibumi Interactive Experience.

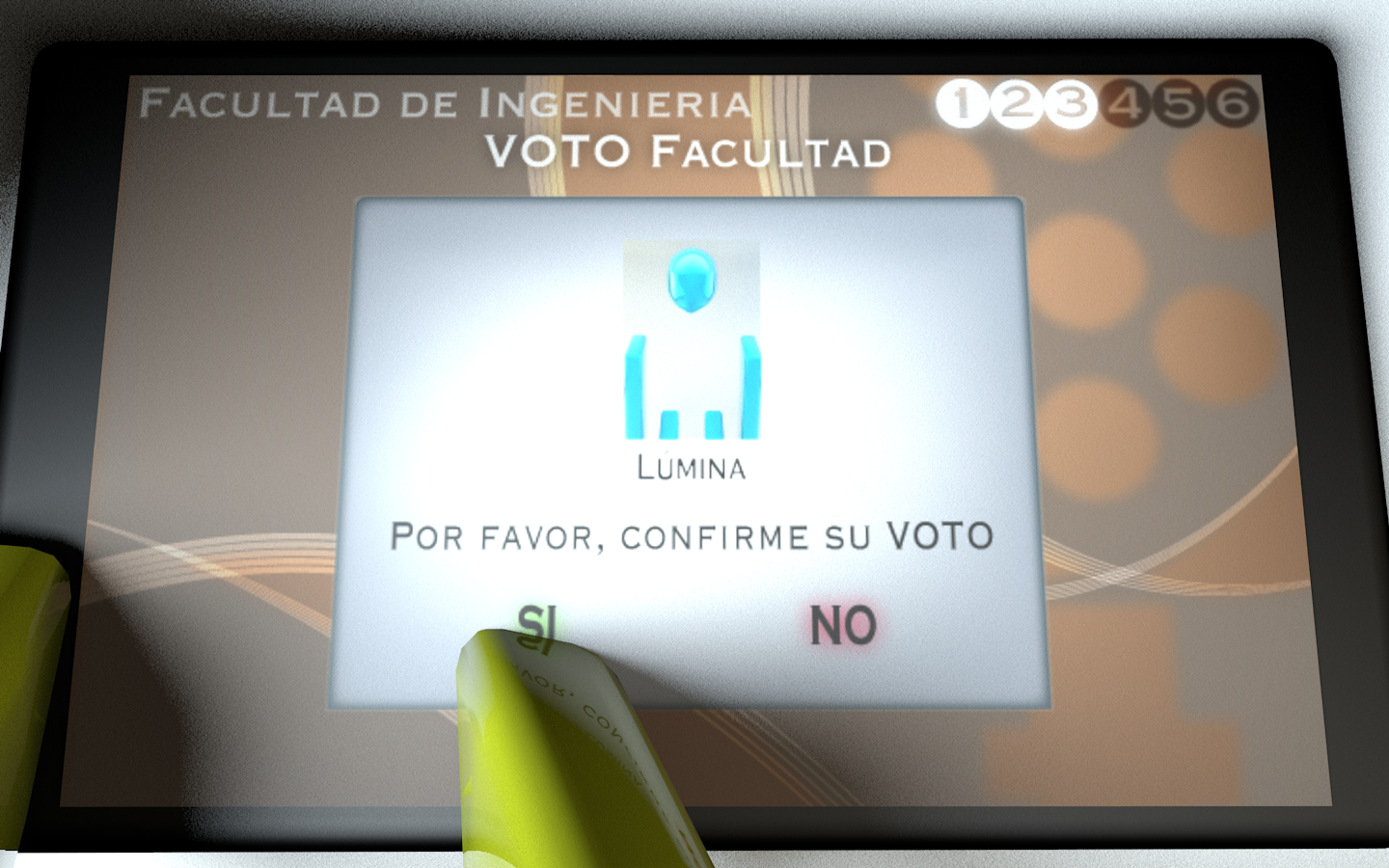

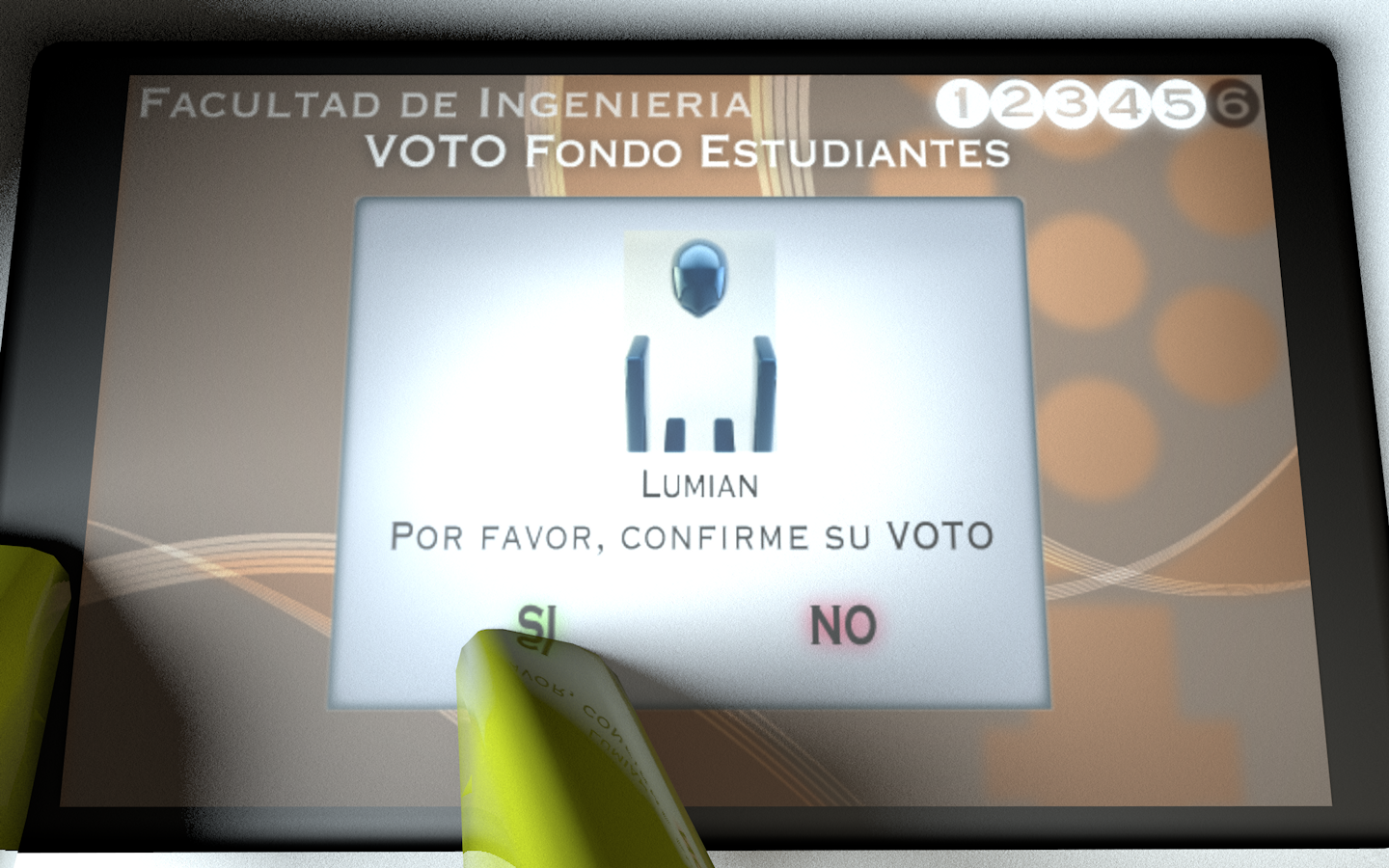

This is why, for the homework's brief, I began composing with the first two principles, Austerity and Simplicity. When deciding how to compose the portrait, I wondered what the fewest elements needed to perceive a face are. Later on, I looked into adding depth, and that's how the overall size composition and the hands came about.

Another trait I explored throughout the exercise was crafting the composition with dynamic dimensions. In other words, the composition keeps consistent proportions regardless of the device it's displayed on. In the end, I noticed that whenever you're trying to figure out a coordinate in space, it's more effective, as code-crafting, to adjust ratios with floating-point numbers than to use arithmetic operations. This is why everything is created from the width and height variables.
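As a rough sketch of the idea (not the code from the homework itself), each position can be written as a fraction of the canvas size. The function name `face_layout`, the specific ratios, and the choice of Python are all assumptions made for illustration.

```python
def face_layout(width, height):
    """Place a minimal face using fractions of the canvas size.

    All ratios below are hypothetical values chosen for illustration.
    """
    return {
        # (x, y) positions as fractions of width and height
        "left_eye": (width * 0.35, height * 0.40),
        "right_eye": (width * 0.65, height * 0.40),
        "mouth": (width * 0.50, height * 0.70),
        # sizes scale with the smaller side so shapes keep their proportion
        "eye_size": min(width, height) * 0.05,
    }


# The same ratios give the same relative layout on two canvas sizes.
small = face_layout(400, 300)
large = face_layout(1600, 1200)
print(small["left_eye"], large["left_eye"])
```

Changing a ratio such as `0.35` moves an element relative to the whole canvas, so the layout does not need to be recalculated for each screen size.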

I've also started trying another environment for editing code, called Sublime Text 2. I find the functions it auto-suggests whenever a character is typed appealing, but what has really stood out compared with the conventional environment is the auto-filling of function parameters.