Generative Soundscape 0.2.1 – IR Comm & Circuit Prototyping

This was one iteration toward a generative soundscape installation. I handled every stage myself: UX design, concepting, PCB design, hardware prototyping, hardware fabrication and assembly, testing, and validation.

Deliverables: Hardware prototypes (PCB modules)

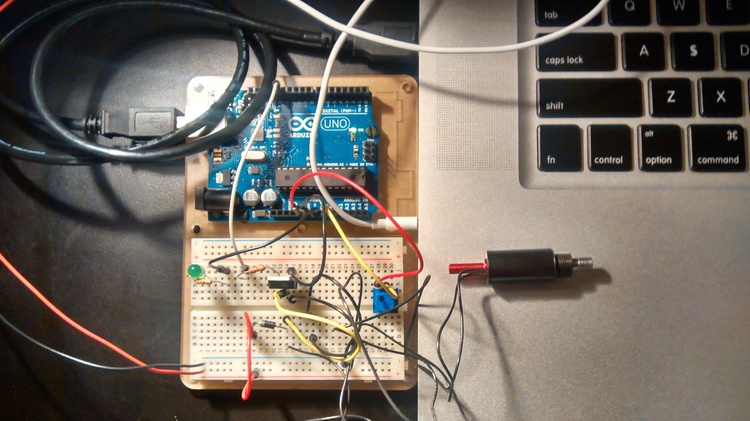

Tools: Arduino, Eagle CAD, Other Mill

Brief

After a failed attempt at creating modules that would communicate through sound, I started looking into infrared (IR) communication.

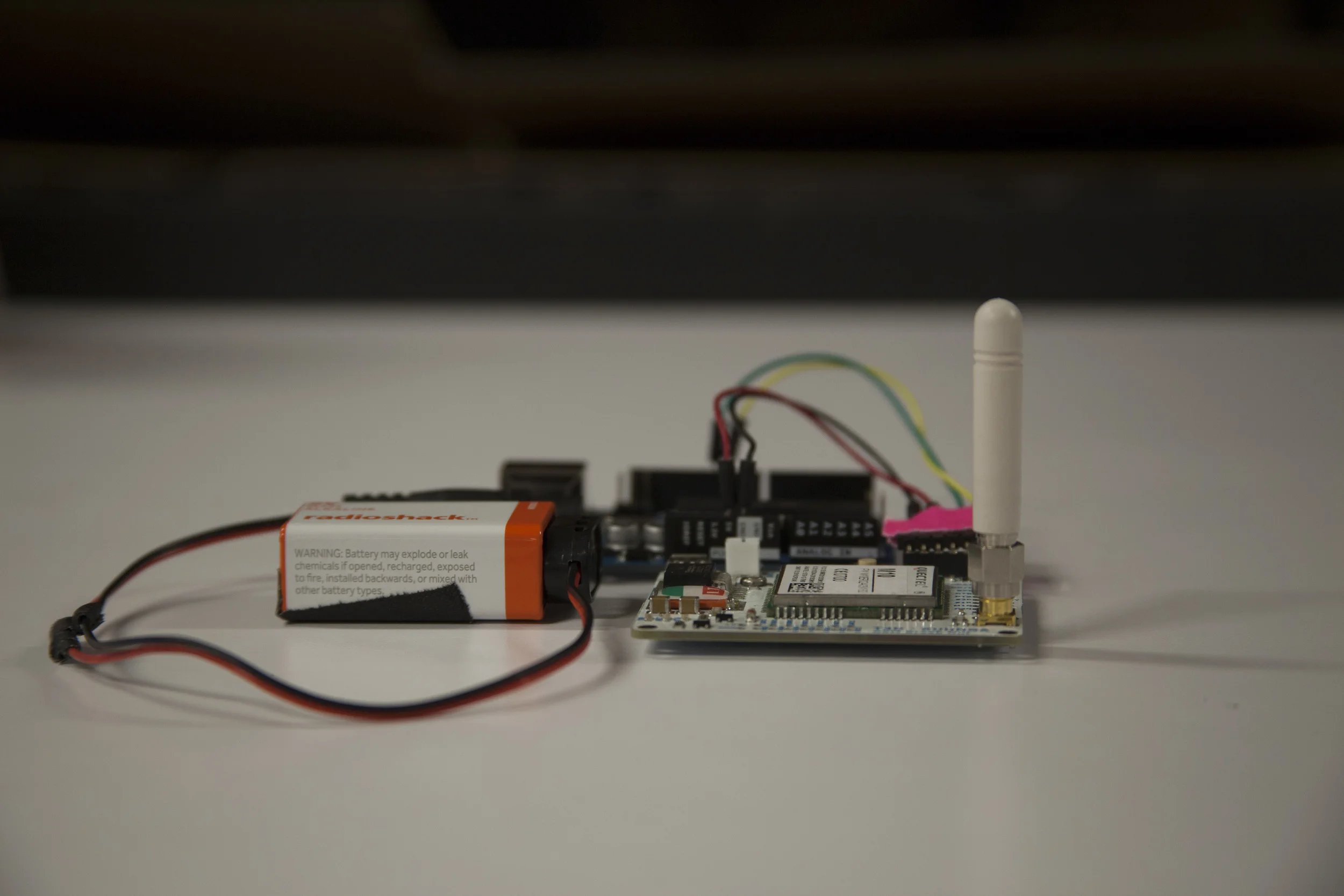

This project experiments with infrared communication between multiple modules. All modules share the same design, made in Eagle CAD: a through-hole board milled on the Other Mill. Each board carries an embedded Arduino (ATmega328), 2 IR receivers (IR-Rx), 3 IR transmitters (IR-Tx), an LED, and a push button.

A module can receive the code sent by any IR remote and relay it to its closest peers.
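One practical question with this kind of relay is how a module avoids re-sending a code that a neighbour has just echoed back to it. The following is a minimal sketch of one possible approach, not the firmware used on these boards: `RelayState`, `shouldRelay`, and `kHoldoffMs` are hypothetical names, and the 500 ms hold-off is an assumed value chosen only for illustration.

```cpp
#include <cstdint>

// Hypothetical per-module state; not taken from the project firmware.
struct RelayState {
    uint32_t lastCode = 0;   // last IR code this module relayed
    uint32_t lastMs   = 0;   // time (ms) at which it was relayed
    bool     hasLast  = false;
};

// Assumed hold-off window during which a repeated code is treated as an echo.
constexpr uint32_t kHoldoffMs = 500;

// Returns true if the module should retransmit `code` to its peers.
// A code equal to the last relayed one, arriving within the hold-off
// window, is dropped so that two neighbours do not echo each other forever.
bool shouldRelay(RelayState& s, uint32_t code, uint32_t nowMs) {
    // Unsigned subtraction stays correct when the millisecond counter wraps.
    const bool isEcho = s.hasLast
                     && code == s.lastCode
                     && (nowMs - s.lastMs) < kHoldoffMs;
    if (isEcho) {
        return false;
    }
    s.lastCode = code;
    s.lastMs   = nowMs;
    s.hasLast  = true;
    return true;
}
```

On an Arduino, `nowMs` would typically come from `millis()`, and a `true` result would trigger the IR transmitters. The same remote button can still be relayed again once the hold-off has elapsed.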

Next Steps

Evaluate power consumption to figure out how the modules can be powered by batteries.
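As a starting point for that evaluation, a rough runtime estimate divides battery capacity by average current draw. The sketch below assumes a module that alternates between an active state and a sleep state; the function names are hypothetical, and any figures passed in would have to come from real measurements on the board, which have not been taken yet. It ignores regulator losses and battery derating.

```cpp
#include <cassert>

// Average current (mA) for a module that is active for `dutyCycle`
// (0.0 to 1.0) of the time and asleep for the rest.
double averageCurrentmA(double activemA, double sleepmA, double dutyCycle) {
    assert(dutyCycle >= 0.0 && dutyCycle <= 1.0);
    return activemA * dutyCycle + sleepmA * (1.0 - dutyCycle);
}

// Idealised runtime (hours) for a battery of `capacitymAh`
// feeding a load that draws `avgmA` on average.
double runtimeHours(double capacitymAh, double avgmA) {
    assert(avgmA > 0.0);
    return capacitymAh / avgmA;
}
```

For example, with placeholder values of 20 mA active, 0 mA asleep, and a 50% duty cycle, the average is 10 mA, so a 1000 mAh battery would last about 100 hours in this idealised model.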