With mobile device peripherals in mind, I set out to create a more playful interaction: one that sits at the intersection of common real-world gestures and the mobile phone's accelerometer.

I borrowed two playful gestures from daily observation: the reflexive spinning and the swift juggling. Both are gestures that could be technically feasible and experientially engaging.

UI Redesign

I decided to simplify the interface to suit the new experience. The accelerometer remixes the images, and a tap reveals a prompt of generated text. This text is a computational mix of the descriptions of the objects shown on screen. This way, people can be inspired both visually and textually.

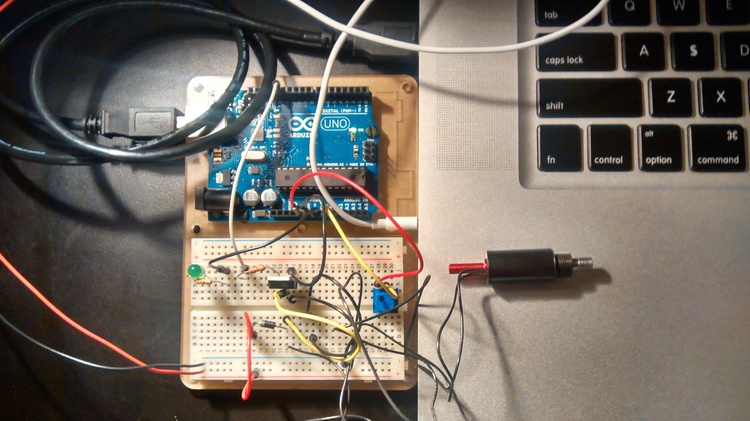

Interactive Prototype

The interactive prototype was made in JavaScript using the Cooper Hewitt Museum's API, RiTa (a toolkit for computational literature), and the p5.js library. This is where the interactive prototype can be experienced.

Through JavaScript, I retrieve all the data from the Cooper Hewitt Museum, including images and text from their online exhibition database. I then clean the information and select a topic: in this case, all objects in the museum related to 3D printing.
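The cleaning and topic-selection step could look roughly like the sketch below. It is not the prototype's actual code: the function name `cleanAndSelect` and the record fields `title`, `description` and `imageUrl` are hypothetical placeholders, and the real Cooper Hewitt responses are shaped differently. It assumes the records have already been fetched and flattened into plain objects.

```javascript
// Minimal sketch, assuming records were already retrieved from the museum API
// and flattened into { title, description, imageUrl } objects (hypothetical fields).
function cleanAndSelect(records, topic) {
  const needle = topic.toLowerCase();
  return records
    // Drop records that cannot be shown: no text description or no image.
    .filter((r) => r && typeof r.description === "string" && r.description.trim() && r.imageUrl)
    // Normalize whitespace so the text is ready for tokenizing later on.
    .map((r) => ({
      title: (r.title || "Untitled").trim(),
      description: r.description.replace(/\s+/g, " ").trim(),
      imageUrl: r.imageUrl,
    }))
    // Keep only the records whose title or description mentions the topic.
    .filter((r) => `${r.title} ${r.description}`.toLowerCase().includes(needle));
}
```

Calling `cleanAndSelect(records, "3D print")` would then return only the displayable objects that mention 3D printing, with tidy descriptions.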

Gestures

It turns out the spinning gesture is one of the blind spots of phone accelerometers, which measure linear acceleration rather than rotation. This is why the prototype only responds to juggling-type gestures.
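One possible way to pick up a juggling-type gesture is to watch for a sudden jump in the magnitude of the acceleration vector. The sketch below is an assumption about how this could be done, not the prototype's detection logic: `magnitude`, `isJuggle` and the default `threshold` of 15 are hypothetical, and the threshold would need tuning on a real device.

```javascript
// Length of one accelerometer sample { x, y, z }.
function magnitude({ x, y, z }) {
  return Math.sqrt(x * x + y * y + z * z);
}

// Returns true when the acceleration magnitude changes by more than
// `threshold` between two consecutive samples, as happens in a toss.
// A gentle spin barely changes the magnitude, so it is not detected.
function isJuggle(samples, threshold = 15) {
  for (let i = 1; i < samples.length; i++) {
    const delta = Math.abs(magnitude(samples[i]) - magnitude(samples[i - 1]));
    if (delta > threshold) return true;
  }
  return false;
}
```

In a p5.js sketch, the samples could be collected on each frame from the built-in `accelerationX`, `accelerationY` and `accelerationZ` variables, and a detected gesture would trigger the image remix.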

Text Prompt

By retrieving the descriptions of the three objects shown on the screen, I create one phrase by remixing their tokens through a set of computational procedures. Every time the images change, the tokens from which the phrases are created change too.
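As an illustration of the remixing idea, the sketch below pools the tokens of the three descriptions and draws words from that pool at random. It is a deliberately simple stand-in written in plain JavaScript: the prototype's actual procedures (and its use of RiTa) are not reproduced here, and `tokenize`, `remixDescriptions`, the `length` parameter and the injectable `random` function are all hypothetical names.

```javascript
// Splits a description into lowercase word tokens.
function tokenize(text) {
  return text.toLowerCase().match(/[a-z0-9'-]+/g) || [];
}

// Builds one phrase of `length` words drawn at random from the pooled
// tokens of all descriptions. `random` can be swapped for testing.
function remixDescriptions(descriptions, length = 8, random = Math.random) {
  const pool = descriptions.flatMap(tokenize);
  if (pool.length === 0) return "";
  const words = [];
  for (let i = 0; i < length; i++) {
    words.push(pool[Math.floor(random() * pool.length)]);
  }
  const phrase = words.join(" ");
  // Capitalize the first letter and close the phrase with a period.
  return phrase.charAt(0).toUpperCase() + phrase.slice(1) + ".";
}
```

Because the pool is rebuilt from whichever three descriptions are on screen, a new set of images automatically yields a new vocabulary for the phrase.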

Even though the prompted phrases contain grammatical errors, embracing this computational glitchiness aligns with the overall playful, mind-diverting concept of overcoming a creative block.